OpenStack Liberty Private Cloud HowTo

- This how-to is a work in progress and will change frequently.

- This how-to uses the default ansible playbooks with as little modification as possible.

- This how-to assumes that you have a more-than-basic understanding of Linux, OpenStack and networking {assumption is the mother of all …}.

- This how-to attempts to cover OpenStack from start to end, and not leave you at this point: “So OpenStack seems to be installed and I am logged in to Horizon as admin... now what?”

- For simplicity, all machines (virtual and physical) are named c11, c12, c13 and so on.

[If you really, really want to know why the numbering does not start at 1, here it is: .1 is used by the VyOS router, .2 goes to br-mgmt on the host computer {otherwise the deploy LXC machine cannot reach any containers on the management network}, and I tend to use .3 and .5 for the internal and external load-balanced IPs. To preserve my sanity, and yours, the machines start at 11. So c11 has 10.11.12.11, 172.29.236.11, 172.29.240.11 and 172.29.244.11 as its SSH, management, VxLAN and storage IPs respectively.]

- I can be reached as admin0 on the freenode #openstack-ansible channel [business hours, Central European Time]. Feel free to communicate there.

- All config files can be found on https://github.com/a1git/openstack-cloud
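The host numbering described above (c11 gets .11 on every network) can be sketched in a few lines of Python. This is only an illustration: the function name `host_addresses` and the role labels are made up for this example, and the four /22 networks are the ones listed in the Networking section of this how-to.

```python
import ipaddress

# Networks used in this how-to; the role labels are chosen for this sketch only.
NETWORKS = {
    "ssh": ipaddress.ip_network("10.11.12.0/22"),
    "management": ipaddress.ip_network("172.29.236.0/22"),
    "vxlan": ipaddress.ip_network("172.29.240.0/22"),
    "storage": ipaddress.ip_network("172.29.244.0/22"),
}


def host_addresses(name):
    """Map a host name such as 'c11' to its address on each network."""
    if not name.startswith("c") or not name[1:].isdigit():
        raise ValueError(f"unexpected host name: {name!r}")
    number = int(name[1:])
    # Machines start at 11; staying below 255 keeps the number in the last octet.
    if not 11 <= number <= 254:
        raise ValueError(f"host number out of range: {number}")
    return {role: str(net.network_address + number) for role, net in NETWORKS.items()}


if __name__ == "__main__":
    for role, address in host_addresses("c11").items():
        print(f"{role:12} {address}")
```

Calling `host_addresses("c12")` gives the four addresses for the next machine, which is handy when filling in the interface files for each host.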

How-To Items:

- High Level Architecture Overview

- Configure Dev/Test single server setup

- Run OpenStack Ansible Playbooks

- Ansible Install – Default

- Ansible Install – use NFS for storage

- Ansible Install – use CEPH for storage

- Ansible Install – Add swift object storage

- Post Install Steps

- Add Floating IPs

- Add Direct DHCP IP network

- Add OS images

- Affinity and Anti-Affinity

- Map flavors to specific nodes

- Map tenants to specific nodes

- Replicate the same to Production

- Connect your Private Cloud with Office – VPN and Routing

- Connect your Private Cloud with AWS and Google Cloud

—

Networking and VLANs

Your colleagues in the office need to be able to create an instance that is reachable from the whole office: just create a VM, share the IP and voilà, everyone can reach it. This is direct DHCP: create an instance in the right network and forget about it. Let's remember this as the “DHCP” range.

You also need your colleagues to be able to create instances in a special network range so that they can be made accessible from outside (using VyOS). Let's remember this as the “Floating” range.

The playbooks need the following ranges:

- 172.29.236.0/22 – Management

- 172.29.240.0/22 – VxLAN

- 172.29.244.0/22 – Storage

On top of that, I will add the following ranges:

- 10.11.12.0/22 – host management, servers SSH

- 192.168.101.0/24 – for floating IPs

- 192.168.201.0/24 – for DHCP IPs
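Before putting these ranges on a switch, it does not hurt to check that none of them overlap. Here is a minimal sketch using Python's standard `ipaddress` module; the dictionary keys and the function name `find_overlaps` are just labels for this example.

```python
import ipaddress
from itertools import combinations

# The six ranges from this how-to; the keys are labels for this sketch only.
RANGES = {
    "management": "172.29.236.0/22",
    "vxlan": "172.29.240.0/22",
    "storage": "172.29.244.0/22",
    "host-ssh": "10.11.12.0/22",
    "floating": "192.168.101.0/24",
    "dhcp": "192.168.201.0/24",
}


def find_overlaps(ranges):
    """Return every pair of range names whose networks overlap."""
    # ip_network() raises ValueError if a CIDR has host bits set (e.g. 10.11.13.0/22).
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in ranges.items()}
    return [(a, b) for a, b in combinations(nets, 2) if nets[a].overlaps(nets[b])]


if __name__ == "__main__":
    print("overlapping ranges:", find_overlaps(RANGES) or "none")
```

If you swap in your own ranges, an empty result means every network is distinct.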

VLANs

Here is my VLAN configuration

- Primary VLAN = 10.11.12.0/22 for host management

- VLAN 10 = Management

- VLAN 20 = VxLAN

- VLAN 30 = Storage

- VLAN 101 = 192.168.101.0/24 – Floating IPs

- VLAN 201 = 192.168.201.0/24 – DHCP IPs

Here is the network configuration (/etc/network/interfaces) of c11 when a single NIC (eth0) carries all the VLANs:

    auto lo
    iface lo inet loopback

    auto eth0
    iface eth0 inet static
        address 10.11.12.11
        netmask 255.255.252.0
        gateway 10.11.12.1
        dns-nameservers 8.8.8.8

    auto eth0.10
    iface eth0.10 inet manual

    auto eth0.20
    iface eth0.20 inet manual

    auto eth0.30
    iface eth0.30 inet manual

    auto br-mgmt
    iface br-mgmt inet static
        bridge_stp off
        bridge_waitport 0
        bridge_fd 0
        bridge_ports eth0.10
        address 172.29.236.11
        netmask 255.255.252.0

    auto br-storage
    iface br-storage inet static
        bridge_stp off
        bridge_waitport 0
        bridge_fd 0
        bridge_ports eth0.30
        address 172.29.244.11
        netmask 255.255.252.0

    auto br-vlan
    iface br-vlan inet manual
        bridge_stp off
        bridge_waitport 0
        bridge_fd 0
        bridge_ports eth0

    auto br-vxlan
    iface br-vxlan inet static
        bridge_stp off
        bridge_waitport 0
        bridge_fd 0
        bridge_ports eth0.20
        address 172.29.240.11
        netmask 255.255.252.0

And here is the same host with a dedicated NIC per network (eth1 to eth4) instead of VLAN sub-interfaces:

    auto lo
    iface lo inet loopback

    auto eth0
    iface eth0 inet static
        address 10.11.12.11
        netmask 255.255.252.0
        gateway 10.11.12.1
        dns-nameservers 8.8.8.8

    auto eth1
    iface eth1 inet manual

    auto eth2
    iface eth2 inet manual

    auto eth3
    iface eth3 inet manual

    auto eth4
    iface eth4 inet manual

    auto br-mgmt
    iface br-mgmt inet static
        bridge_stp off
        bridge_waitport 0
        bridge_fd 0
        bridge_ports eth1
        address 172.29.236.11
        netmask 255.255.252.0

    auto br-storage
    iface br-storage inet static
        bridge_stp off
        bridge_waitport 0
        bridge_fd 0
        bridge_ports eth2
        address 172.29.244.11
        netmask 255.255.252.0

    auto br-vlan
    iface br-vlan inet manual
        bridge_stp off
        bridge_waitport 0
        bridge_fd 0
        bridge_ports eth3

    auto br-vxlan
    iface br-vxlan inet static
        bridge_stp off
        bridge_waitport 0
        bridge_fd 0
        bridge_ports eth4
        address 172.29.240.11
        netmask 255.255.252.0
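Since the bridge stanzas above differ only in bridge name, port and address, they can be generated per host rather than typed by hand. The following is a rough sketch, not part of the playbooks; `bridge_stanza` is a helper invented for this example.

```python
def bridge_stanza(bridge, port, address=None, netmask="255.255.252.0"):
    """Render one bridge stanza in the ifupdown syntax used in this how-to.

    A bridge without an address (br-vlan here) is rendered as 'inet manual'.
    """
    method = "static" if address else "manual"
    lines = [
        f"auto {bridge}",
        f"iface {bridge} inet {method}",
        "    bridge_stp off",
        "    bridge_waitport 0",
        "    bridge_fd 0",
        f"    bridge_ports {port}",
    ]
    if address:
        lines += [f"    address {address}", f"    netmask {netmask}"]
    return "\n".join(lines)


if __name__ == "__main__":
    # Single-NIC layout for c11, with the ports and addresses shown above.
    layout = [
        ("br-mgmt", "eth0.10", "172.29.236.11"),
        ("br-storage", "eth0.30", "172.29.244.11"),
        ("br-vlan", "eth0", None),
        ("br-vxlan", "eth0.20", "172.29.240.11"),
    ]
    print("\n\n".join(bridge_stanza(*entry) for entry in layout))
```

For the multi-NIC layout, only the port column changes (eth1, eth2, eth3, eth4).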

Do you need the 10.11.12.x range? Not really. You can just put eth0 on br-mgmt and use the management IPs there. However, your network team or your policies might require a particular range for reaching the servers, so this is entirely up to you.

Before you start, ensure that the deploy machine (the server from which you are going to run the Ansible playbooks) can SSH to all the other servers:

ssh 10.11.12.11 ; ssh 10.11.12.13 or ssh 172.29.236.11 ; ssh 172.29.236.12 etc.
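With more than a handful of hosts, checking them one by one gets tedious. A small sketch of a reachability check follows; note that it only tests whether TCP port 22 answers, not whether key-based login actually works, and the function name `ssh_port_open` is made up for this example.

```python
import socket


def ssh_port_open(host, port=22, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable networks.
        return False


# Example use from the deploy machine (addresses are the ones from this how-to):
#
#     for host in ["10.11.12.11", "10.11.12.12", "10.11.12.13"]:
#         print(host, "ok" if ssh_port_open(host) else "NOT reachable")
```

A host that passes this check still needs your SSH key installed before the playbooks can log in.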

Follow the OpenStack install guide to set up the deploy host [http://docs.openstack.org/developer/openstack-ansible/install-guide/deploymenthost.html] [sorry for the screenshots, WordPress does not handle spaces well].

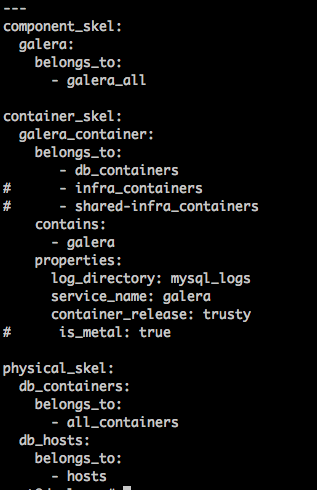

To have Galera, memcached and RabbitMQ run on a separate host, we edit this file and make the following changes:

/etc/openstack_deploy/env.d/galera.yml

What we do is change the mapping from infra_containers and shared_infra_containers to a new group called db_containers, and then, further down, define that db_containers must live on a new host group called db_hosts. You can name the host group whatever suits you: data_hosts, network_hosts, keystone_hosts, unicorn_hosts etc.

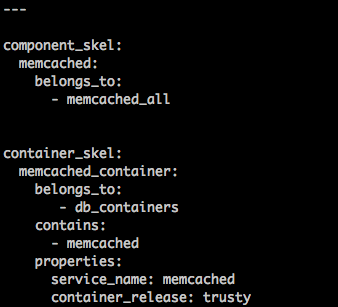

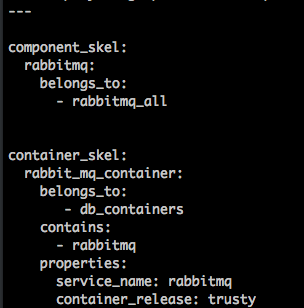

Since db_hosts is already defined there, we just point memcached and RabbitMQ to this new host group in these two files:

/etc/openstack_deploy/env.d/memcache.yml

/etc/openstack_deploy/env.d/rabbitmq.yml

Now to the config /etc/openstack_deploy/openstack_user_config.yml

[available at: http://openstackfaq.com/openstack_user_config.yml] Make sure to change the

My /etc/openstack_deploy/user_variables.yml has the following additions:

    haproxy_use_keepalived: true
    haproxy_keepalived_external_vip_cidr: "10.11.12.3/22"
    haproxy_keepalived_internal_vip_cidr: "172.29.236.3/22"
    haproxy_keepalived_external_interface: "eth0"
    haproxy_keepalived_internal_interface: "br-mgmt"
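A VIP that sits in the wrong network is an easy mistake to make here, so a quick check before running the playbooks can save a redeploy. This sketch uses Python's standard `ipaddress` module; `vip_in_network` is a name invented for this example.

```python
import ipaddress


def vip_in_network(vip_cidr, network_cidr):
    """Return True if the VIP address lies inside the given network."""
    vip = ipaddress.ip_interface(vip_cidr)
    return vip.ip in ipaddress.ip_network(network_cidr)


if __name__ == "__main__":
    # External VIP against the host/SSH range, internal VIP against management.
    print(vip_in_network("10.11.12.3/22", "10.11.12.0/22"))
    print(vip_in_network("172.29.236.3/22", "172.29.236.0/22"))
```

The external VIP should fall in the range served by eth0 and the internal VIP in the range served by br-mgmt, matching the two interface variables.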

Make sure you set up DNS properly.

When that is done, run the playbooks in this order: setup-hosts => haproxy-install => setup-infrastructure => setup-openstack
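The order matters, and a failed playbook should stop the run rather than let the next one start. Below is a minimal sketch of a wrapper that does this. It assumes the `openstack-ansible` wrapper command is on the PATH, that the playbooks live in /opt/openstack-ansible/playbooks as in the install guide, and that the playbook files carry a .yml extension; adjust these if your checkout differs. The function names are made up for this example.

```python
import subprocess

# Playbooks in the order given above; the .yml extension is an assumption.
PLAYBOOKS = [
    "setup-hosts.yml",
    "haproxy-install.yml",
    "setup-infrastructure.yml",
    "setup-openstack.yml",
]


def playbook_commands(playbooks=PLAYBOOKS):
    """Build one command line per playbook, preserving the order."""
    return [["openstack-ansible", playbook] for playbook in playbooks]


def run_all(playbook_dir="/opt/openstack-ansible/playbooks", dry_run=True):
    """Run the playbooks in order; with dry_run=True only print the commands."""
    for command in playbook_commands():
        if dry_run:
            print(" ".join(command))
        else:
            # check=True raises CalledProcessError and stops at the first failure.
            subprocess.run(command, cwd=playbook_dir, check=True)


if __name__ == "__main__":
    run_all(dry_run=True)
```

Call `run_all(dry_run=False)` on the deploy host once the printed commands look right.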

You should get a working OpenStack. You should be able to log in to Horizon as admin, and also log in to the utility container, source the openrc file and act as admin:

    root@c14_utility_container-b2d361f2:~# openstack service list
    +----------------------------------+----------+----------------+
    | ID                               | Name     | Type           |
    +----------------------------------+----------+----------------+
    | 117f8c9bc385439a951ca20d12e0b89f | nova     | compute        |
    | 12e8f0b577384a2a88855696e8965e7a | heat-cfn | cloudformation |
    | 3e8de5211e6b46299ec4908c2382fa85 | glance   | image          |
    | a0af365f9a0d48ca86aa3353425d4c8c | heat     | orchestration  |
    | ac8ce0b4df12463db435c5c29941f2d2 | keystone | identity       |
    | c06057738a2d4191ba3faea4142e13f6 | cinder   | volume         |
    | d9145090aef3412fbd80ac0ba3c3d700 | neutron  | network        |
    | f94dcd17e1474f7cbbae75c7d5739a5b | cinderv2 | volumev2       |
    +----------------------------------+----------+----------------+